Our work on transforming non-speech captions with anchored generative models is headed to ASSETS 2025 in Denver!

Hi! 👋 I am a second-year Ph.D. student in Computer Science & Engineering at the University of Michigan, where I work with Prof. Dhruv Jain in the Soundability Lab.

My research interests lie at the intersection of Human-Computer Interaction, applied AI, and accessibility. I develop human-AI systems that enable people with disabilities to curate and adapt multimodal content from both real-world and media contexts, transforming it into accessible formats that align with their cognitive and sensory needs.

Recent News

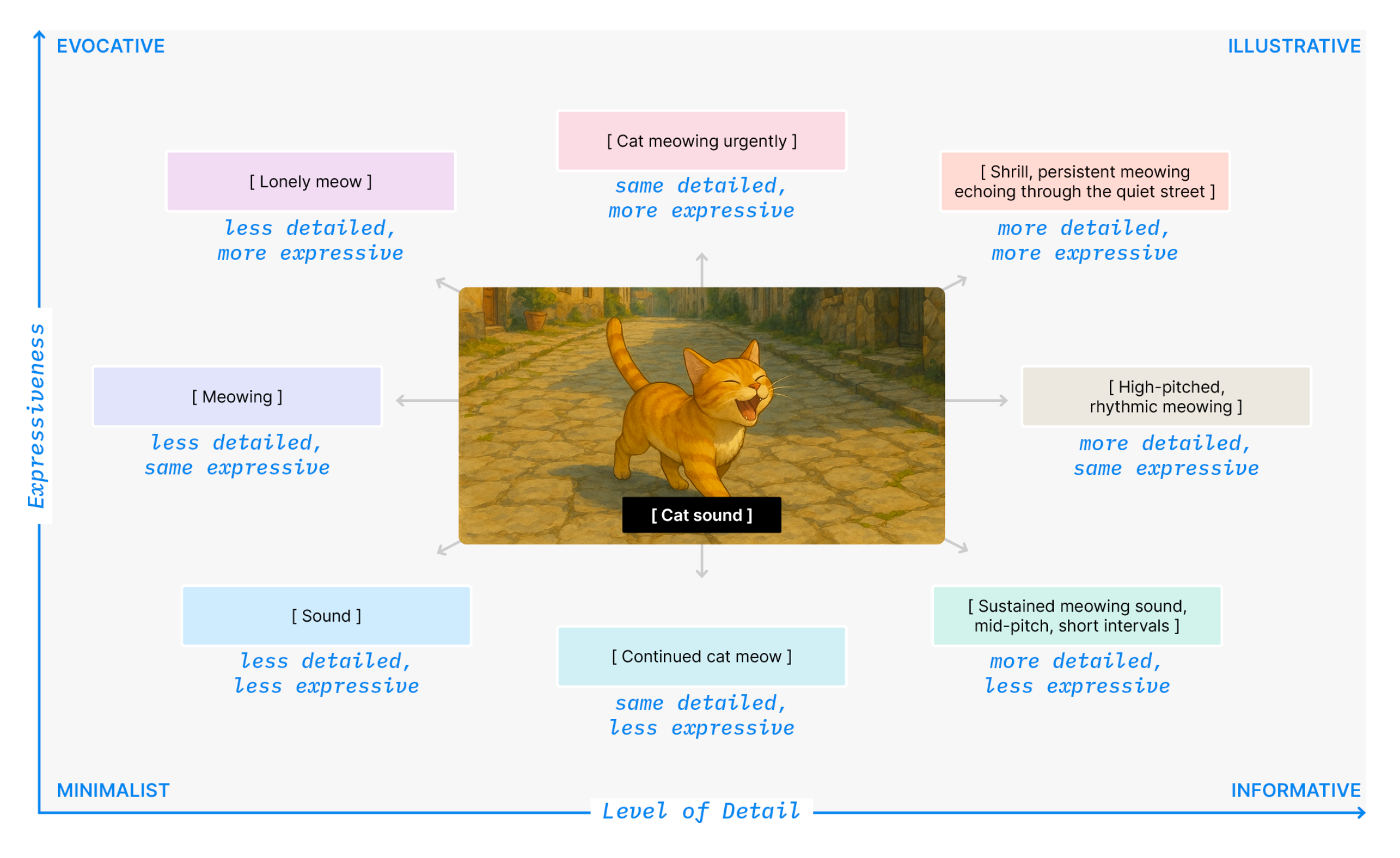

I will present SoundWeaver at CHI 2025 in Yokohama, sharing how we support real-time sensemaking of auditory environments.

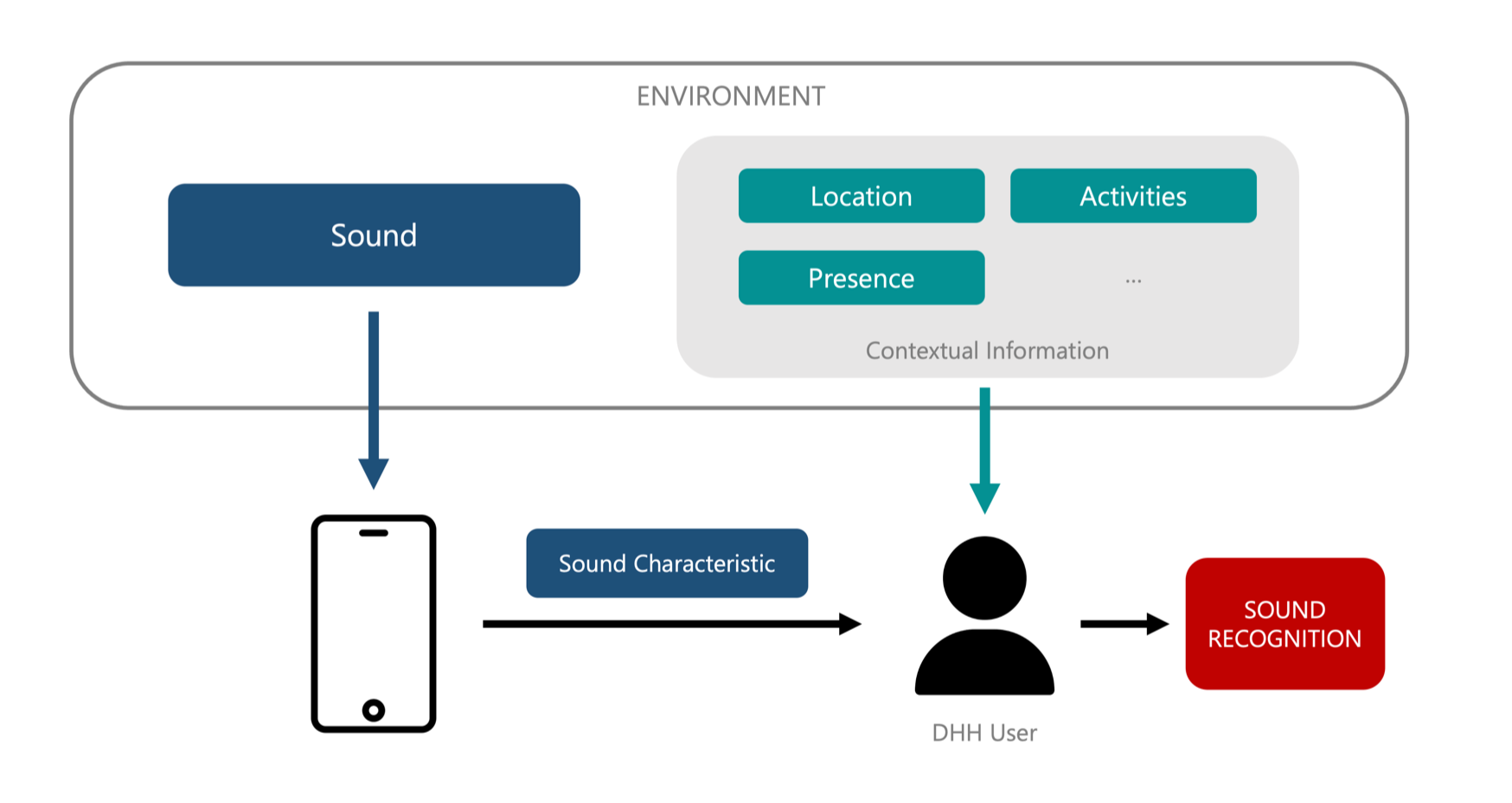

Our Human-AI Collaborative Sound Awareness (HACS) paper was accepted to CHI 2024!

Thrilled to continue my Ph.D. journey at Michigan CSE and keep building accessible technologies with the Soundability Lab.

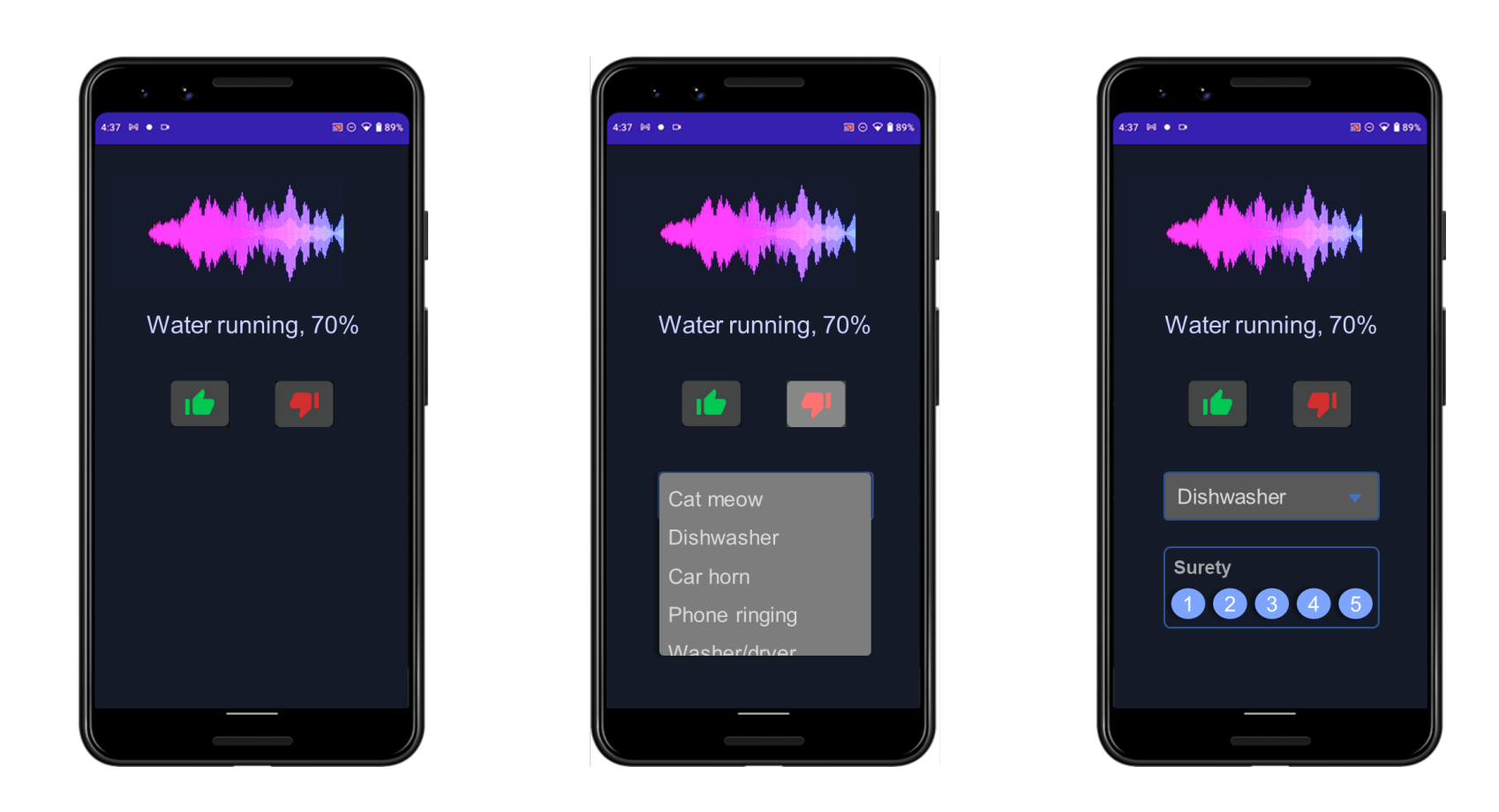

Our field study of mobile sound recognition systems received an Honorable Mention at ASSETS 2023.

Publications

Contact

I love connecting with researchers, designers, and community partners who care about creating a more accessible world. Feel free to reach out!

Find Me

Computer Science & Engineering

University of Michigan

Soundability Lab · Ann Arbor, MI